on

Spec-Driven Development - Mind the Context Length

Spec-Driven Development and LLM Context Length

Spec-Driven Development helps us think through features, break them down, plan implementation, and validate our work. It makes AI Coding more manageable.

However, there is a nuanced relationship between SDD and Context Management. SDD can either become a token-hungry markdown burden or an ally that reduces the meandering requirements journey of vibe coding.

Single-Shot-Prompts to Single-Spec-Apps

The fundamental concepts backing SDD are not new. User stories and acceptance criteria help us articulate features better than rambling requirements documents. For example, Kiro uses EARS (Easy Approach to Requirements Syntax).

If we start with a specification for a very large feature or an entire application, we may struggle. Example: "automated shipment creation and vendor communication to real-time tracking and proactive issue resolution integrating with legacy components A and B.". This is akin to a single shot prompt, at a specification level.”

Even human engineers may not appreciate such large chunks all at once and we may often get an “I do not know if it will take a week or an eternity” estimate. The back-and-forth defining acceptance criteria can burn our project budget.

The coding agent has to do significant amount of work to break such a feature down into spec, plan, tasks, etc. and will have to repeatedly ask us for clarifications. For example, in GitHub Spec Kit, the spec can consume tokens quickly and show many “[NEEDS CLARIFICATION]” items that we must address. The “/plan” phase (which is generally time consuming) can quickly exhaust context window at this complexity level.

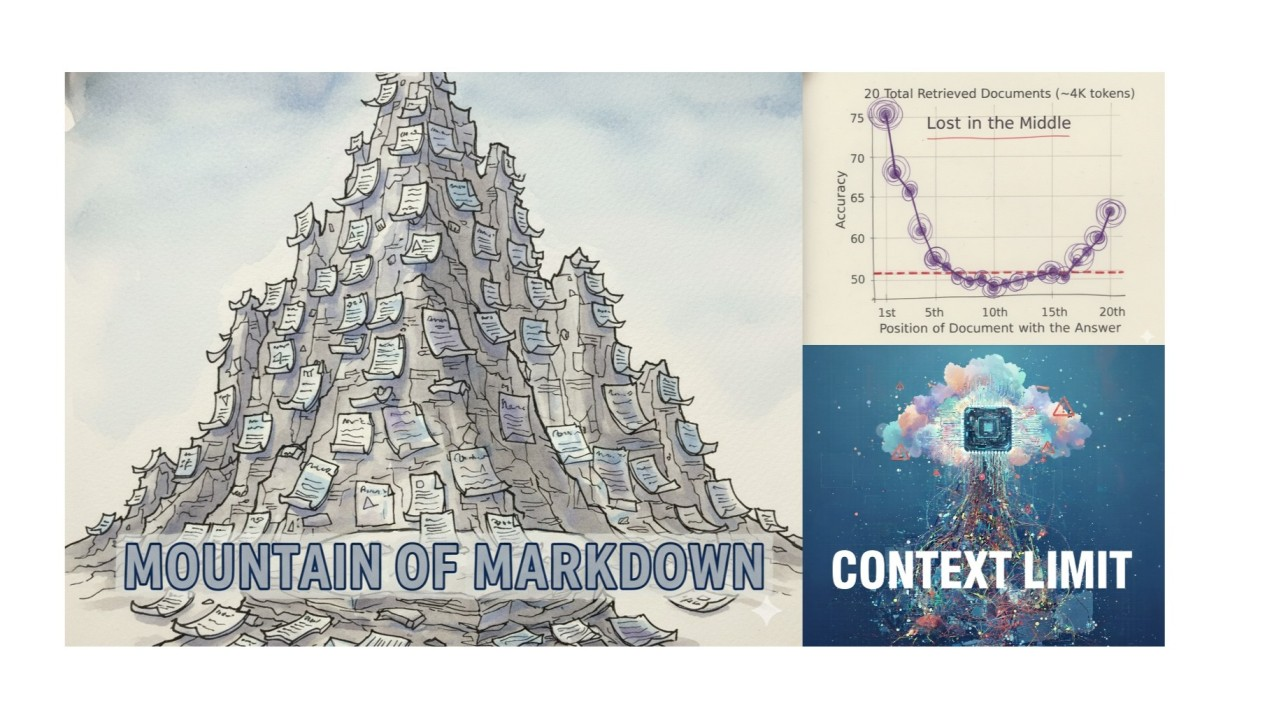

Mountain of Markdown

Even with top-tier plans for our coding agents and enough patience to power through clarifications, the sheer volume of generated Markdown can become impractical for us to review.

Vibe Specifications

Using coding agents to update specs is a good practice. Sometimes it is even better than spot-fixing by hand, since the agent may catch impacts on related points. This works as long as we review the changes.

But if our markdown files grow too long and we start auto-updating them because reading them becomes too time-consuming, we hit an Ironic Trap.

At this point we are vibe specifying, which only means there will be further deviations when we begin with implementing the tasks.

When AI writes and updates specifications without sufficient human review, we can lose track of intent. The whole point of SDD is clarity and intentionality. If we cannot meaningfully review specs because they are too long, too numerous, or too auto-generated, they are not serving their purpose. Any issues left uncaught will show up much bigger once the implementation is done.

Lost in the Middle

You may know the Stanford paper “Lost in the Middle: How Language Models Use Long Contexts” (Recommended Reading). SDD, in a way, helps engineer context to avoid the above issue and to address recency and primacy bias. However if the spec itself is too large, then even the process of specifying a feature can suffer from these issues.

Oversized specs risk this problem during all phases of feature development. We may notice forgotten clarifications and requirements drifting from the original direction despite validation. This loss in quality of the specification may defeat the purpose in itself.

Are tools to blame?

Tooling choice matters less if we are stuck in the issues above. We can debate which tool generates less markdown or switch agents between phases to optimise token usage, but this does not address the root cause. To an extent some tools can nudge us in the right direction to reduce the scope of a feature etc.

Tooling cannot save us from lack of structured thinking. Understanding how to decompose features always helps. SDD can accelerate the process, but whether the spec is right remains ours to review and approve.

Bite-Sized Features

“How do we know if a feature is too big? A simple heuristic: are the artefacts human reviewable? If we are skimming spec changes thinking "the AI probably got it right," the feature is too large.”

How do we know ahead of time? Often we don’t. Start specifying a feature, and if it is causing cognitive overload, break it down and restart. In early-stage development, restarting usually costs less than discovering a mismatch much later. We can also ask the coding agent to help us break down features at a high level.

Once we know a feature is too big, slicing it into meaningful pieces also requires some thought. Carving out meaningful chunks that delivery value when deployed is important as always. Once we slice a feature into stories, we can leverage concepts like INVEST to help us check if these stories are of good quality. It may be useful to revisit books like “User Stories Applied” to brush up on fundamentals. We can even feed these concepts to the coding agent to help it evaluate the size of a feature we are trying to spec.

Now we can prioritise these stories using techniques such as MoSCoW (Must Have, Should Have, Could Have, Would Have) to help arrive at a slice that covers the must-haves, and then reserve the rest for another day or another AI coding session.

Subagents for Managing Context Better

Subagents can help manage context length by keeping their own isolated context. In SDD, delegating research or validation to subagents can ease the overall burden. As an added bonus, we can also parallelise these tasks where possible. But this is an optimisation. first we need to get the feature size right.

Recap

SDD, like other approaches, can be done poorly and it is possible that this experience can have us dismiss it altogether. However IMHO giving it another shot with below points in mind can help achieve better outcome.

- Start small: Fully specify features in a line or two to start with; avoid long “and” chains.

- Specs are for humans first: If we cannot reasonably review a spec, it is too big.

- Slice meaningfully: Decompose features into valuable slices that can be delivered independently.

- Context matters: Understand our coding agent limitations and design specs to avoid overly long contexts.

I’d love feedback, questions, or pointers to your own experience with SDD.

Resources

- “Lost in the Middle: How Language Models Use Long Contexts”

- List of SDD tools of that I am using / trialing and watching

- “User Stories Applied”

Read the full article on LinkedIn →

Originally published on LinkedIn on October 24, 2025